How Eight Things Will Change the Way You Approach DeepSeek

What the DeepSeek example illustrates is that this overwhelming focus on national security (and on compute) limits the space for a real discussion of the tradeoffs of certain governance strategies and the impacts these have in areas beyond national security. How did it go from a quant trader's passion project to one of the most talked-about models in the AI space? As the field of code intelligence continues to evolve, papers like this one will play an important role in shaping the future of AI-powered tools for developers and researchers. If I am building an AI app with code execution capabilities, such as an AI tutor or AI data analyst, E2B's Code Interpreter would be my go-to tool. I have curated a list of open-source tools and frameworks that will help you build robust and reliable AI applications. Addressing the model's efficiency and scalability will be important for wider adoption and real-world applications. Generalizability: while the experiments demonstrate strong performance on the tested benchmarks, it is essential to evaluate the model's ability to generalize to a wider range of programming languages, coding styles, and real-world scenarios. DeepSeek-V2.5's architecture includes key innovations, such as Multi-Head Latent Attention (MLA), which significantly reduces the KV cache, thereby improving inference speed without compromising model performance.
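To make the KV-cache point above concrete, here is a rough back-of-the-envelope sketch. It compares the cache size of standard multi-head attention, which stores a key and a value vector per head, with a scheme that stores a single compressed latent vector per token and layer. All dimensions below (layer count, head count, latent size) are hypothetical values chosen for illustration, not DeepSeek's published configuration, and the sketch ignores other details of the real MLA design.

```python
def kv_cache_bytes_mha(n_layers, n_heads, head_dim, seq_len, bytes_per_value=2):
    """Standard multi-head attention: one key and one value vector
    per head, per layer, per token (hence the factor of 2)."""
    return 2 * n_layers * n_heads * head_dim * seq_len * bytes_per_value


def kv_cache_bytes_latent(n_layers, latent_dim, seq_len, bytes_per_value=2):
    """Simplified latent cache: one shared compressed vector
    per layer, per token, from which keys and values are rebuilt."""
    return n_layers * latent_dim * seq_len * bytes_per_value


# Hypothetical model dimensions (assumptions, for illustration only).
n_layers, n_heads, head_dim, seq_len = 32, 32, 128, 4096
latent_dim = 512

mha_bytes = kv_cache_bytes_mha(n_layers, n_heads, head_dim, seq_len)
latent_bytes = kv_cache_bytes_latent(n_layers, latent_dim, seq_len)

print(f"MHA cache:    {mha_bytes / 1024**2:.0f} MiB")
print(f"Latent cache: {latent_bytes / 1024**2:.0f} MiB")
print(f"Reduction:    {mha_bytes / latent_bytes:.0f}x")
```

With these assumed numbers the cache shrinks because each token stores 512 values per layer instead of 2 x 32 x 128 = 8192. The actual saving depends entirely on the real model dimensions.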

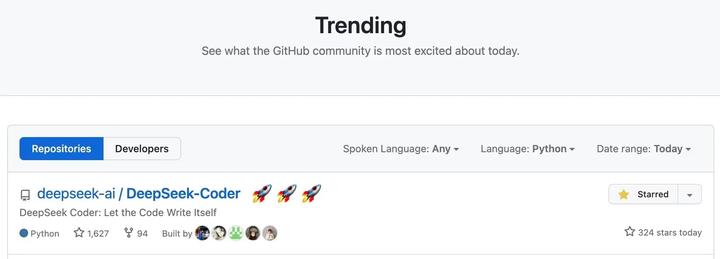

These advancements are showcased through a series of experiments and benchmarks, which demonstrate the system's strong performance on various code-related tasks. The advancements in DeepSeek-V2.5 underscore its progress in optimizing model efficiency and effectiveness, solidifying its position as a leading player in the AI landscape. OpenAI recently unveiled its latest model, o3, boasting significant advances in reasoning capabilities. The researchers have also explored the potential of DeepSeek-Coder-V2 to push the limits of mathematical reasoning and code generation for large language models, as evidenced by the related papers DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models and AutoCoder: Enhancing Code with Large Language Models. By improving code understanding, generation, and editing capabilities, the researchers have pushed the boundaries of what large language models can achieve in programming and mathematical reasoning. To address this problem, researchers from DeepSeek, Sun Yat-sen University, the University of Edinburgh, and MBZUAI have developed a novel method for generating large datasets of synthetic proof data. A state-of-the-art AI data center might have as many as 100,000 Nvidia GPUs inside and cost billions of dollars.


If you want to set this up in the LLM configuration from your web browser, use WebUI. Other people were reminded of the advent of the "personal computer" and the ridicule heaped upon it by the then giants of the computing world, led by IBM and other purveyors of large mainframe computers. If DeepSeek V3, or a similar model, were released with full training data and code, as a true open-source language model, then the cost numbers could be taken at face value. As AI ecosystems grow increasingly interconnected, understanding these hidden dependencies becomes essential, not just for security research but also for ensuring AI governance, ethical data use, and accountability in model development. Pre-trained on nearly 15 trillion tokens, the model outperforms other open-source models and rivals leading closed-source models, according to the reported evaluations. Each model is pre-trained on a repo-level code corpus using a window size of 16K and an additional fill-in-the-blank task, resulting in foundational models (DeepSeek-Coder-Base). With Amazon Bedrock Custom Model Import, you can import DeepSeek-R1-Distill Llama models ranging from 1.5 to 70 billion parameters.

Imagine I have to quickly generate an OpenAPI spec; today I can do it with one of the local LLMs, such as Llama, using Ollama. You may need to play around with this one. US-based companies like OpenAI, Anthropic, and Meta have dominated the field for years. I have been building AI applications for the past four years and contributing to major AI tooling platforms for a while now. Modern RAG applications are incomplete without vector databases. A vector database can integrate seamlessly with existing Postgres databases. FP16 uses half the memory of FP32, which means the RAM requirements for FP16 models are approximately half of the FP32 requirements. By activating only the computational resources required for a task, DeepSeek AI offers a cost-efficient alternative to conventional models. DeepSeek refers to a new set of frontier AI models from a Chinese startup of the same name. An AI startup from China, DeepSeek, has upset expectations about how much money is needed to build the latest and greatest AIs. Many are excited by the demonstration that companies can build strong AI models without enormous funding and computing power.
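The FP16 versus FP32 memory claim above comes down to simple arithmetic: FP32 stores each parameter in 4 bytes and FP16 in 2 bytes. The sketch below estimates the memory needed for model weights alone. The 7-billion-parameter model size is a hypothetical example, and the estimate deliberately ignores activations, the KV cache, and runtime overhead, so real RAM usage will be higher.

```python
# Bytes used to store a single parameter in each format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gib(n_params, dtype):
    """Approximate memory for model weights only, in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 1024**3


# Hypothetical model size, chosen for illustration.
n_params = 7_000_000_000

fp32_gib = weight_memory_gib(n_params, "fp32")
fp16_gib = weight_memory_gib(n_params, "fp16")

print(f"FP32 weights: {fp32_gib:.1f} GiB")
print(f"FP16 weights: {fp16_gib:.1f} GiB")
```

The same function can be extended with other formats (for example, an 8-bit entry of 1 byte per parameter) to compare quantization options.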
